Google内部每个SRE team,都维护着简洁高效的监控系统。定义简单的规则、快速发现问题、找到问题本质,是监控的基线。尽可能减少监控告警带来的噪音,每个监控项,不仅发现问题,还要找到问题的本因。

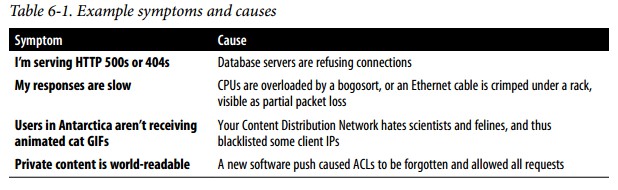

Your monitoring system should address two questions: what’s broken, and why?

The “what’s broken” indicates the symptom; the “why” indicates a (possibly inter‐

mediate) cause. Table 6-1lists some hypothetical symptoms and corresponding

causes.

“What” versus “why” is one of the most important distinctions in writing good moni‐

toring with maximum signal and minimum noise.

Monitoring and alerting enables a system to tell us when it’s broken, or perhaps to tell

us what’s about to break. When the system isn’t able to automatically fix itself, we

want a human to investigate the alert, determine if there’s a real problem at hand, mit‐

igate the problem, and determine the root cause of the problem. Unless you’re per‐

forming security auditing on very narrowly scoped components of a system, you

should never trigger an alert simply because “something seems a bit weird.”

Paging a human is a quite expensive use of an employee’s time. If an employee is at

work, a page interrupts their workflow. If the employee is at home, a page interrupts

their personal time, and perhaps even their sleep. When pages occur too frequently,

employees second-guess, skim, or even ignore incoming alerts, sometimes even

ignoring a “real” page that’s masked by the noise. Outages can be prolonged because

other noise interferes with a rapid diagnosis and fix. Effective alerting systems have

good signal and very low noise.

In general, Google has trended toward simpler and faster monitoring systems, with

better tools for post hocanalysis. We avoid “magic” systems that try to learn thresh‐

olds or automatically detect causality. Rules that detect unexpected changes in end-user request rates are one counterexample; while these rules are still kept as simple as

possible, they give a very quick detection of a very simple, specific, severe anomaly.

Other uses of monitoring data such as capacity planning and traffic prediction can

tolerate more fragility, and thus, more complexity. Observational experiments con‐

ducted over a very long time horizon (months or years) with a low sampling rate

(hours or days) can also often tolerate more fragility because occasional missed sam‐

ples won’t hide a long-running trend.

Google SRE has experienced only limited success with complex dependency hierar‐

chies. We seldom use rules such as, “If I know the database is slow, alert for a slow

database; otherwise, alert for the website being generally slow.” Dependency-reliant

rules usually pertain to very stable parts of our system, such as our system for drain‐

ing user traffic away from a datacenter. For example, “If a datacenter is drained, then

don’t alert me on its latency” is one common datacenter alerting rule. Few teams at

Google maintain complex dependency hierarchies because our infrastructure has a

steady rate of continuous refactoring.